Artificial Intelligence for Extended Reality (AI for XR)

AI for XR explores the intersection of artificial intelligence and immersive extended reality systems. Our research focuses on on-device AI: deploying and evaluating large language models (LLMs) directly on XR headsets. This approach enables real-time, private, and interactive AI applications without relying on cloud or server infrastructure. Our evaluation framework, AIvaluateXR, supports benchmarking across XR platforms in terms of inference speed, memory usage, and user experience. We are also developing vision-language models tailored for XR environments (VLMs for XR). These models target 3D scene understanding, perception, and natural multimodal interaction, with use cases in fields such as healthcare and education.

Below are some representative works in this direction.

AIvaluateXR: An Evaluation Framework for on-Device AI in XR with Benchmarking Results

arXiv preprint, https://arxiv.org/abs/2502.15761 (2025)

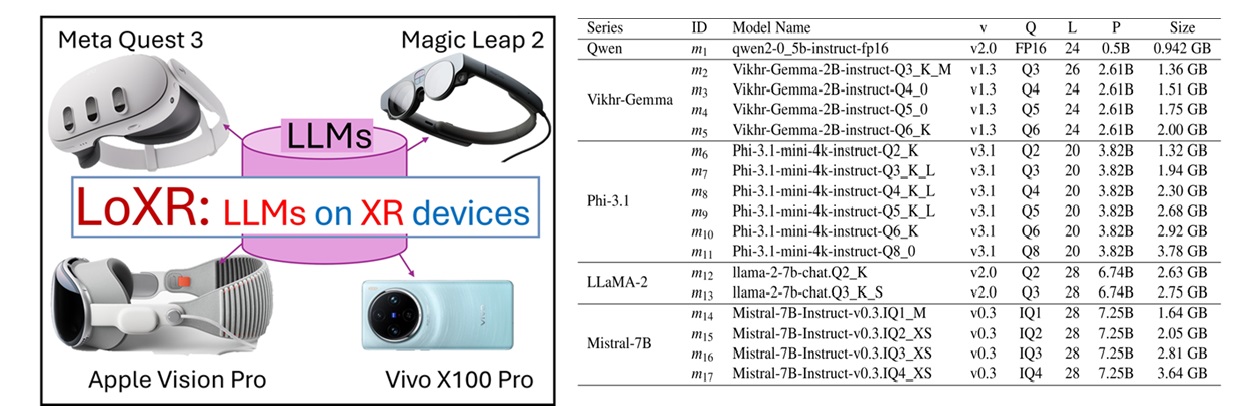

In this work, we present AIvaluateXR, a comprehensive evaluation framework for benchmarking LLMs running on XR devices. To demonstrate the framework, we deploy 17 selected LLMs across four XR platforms—Magic Leap 2, Meta Quest 3, Vivo X100s Pro, and Apple Vision Pro—and conduct an extensive evaluation.

LLMs on XR (LoXR): Performance Evaluation of LLMs Executed Locally on XR Devices

Xinyu Liu, Dawar Khan, Omar Mena, Donggang Jia, Alexandre Kouyoumdjian, Ivan Viola

IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), DOI: 10.1109/VRW66409.2025.00252

This paper presents LoXR, a performance evaluation of Large Language Models (LLMs) executed locally on XR devices.