AIvaluateXR: An Evaluation Framework for on-Device AI in XR with Benchmarking Results

Authors

Dawar Khan, Xinyu Liu, Omar Mena, Donggang Jia, Alexandre Kouyoumdjian, Ivan Viola

Description

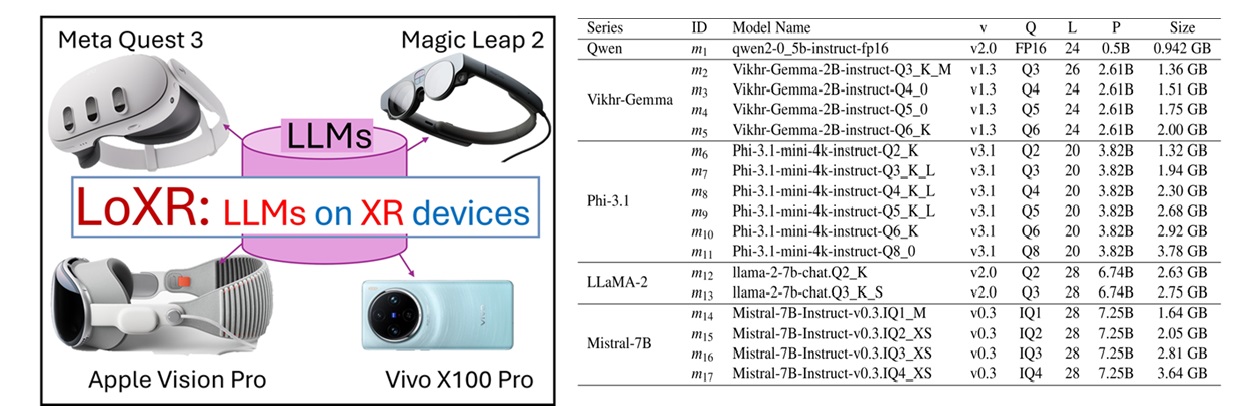

The deployment of large language models (LLMs) on extended reality (XR) devices has great potential to advance the field of human–AI interaction. However, selecting the appropriate model and device for a specific task remains challenging when inference runs directly on the device. In this work, we present AIvaluateXR, a comprehensive evaluation framework for benchmarking LLMs running on XR devices. To demonstrate the framework, we deploy 17 selected LLMs across four XR platforms (Magic Leap 2, Meta Quest 3, Vivo X100s Pro, and Apple Vision Pro) and conduct an extensive evaluation. Our experimental setup measures four key metrics: performance consistency, processing speed, memory usage, and battery consumption. For each of the 68 model–device pairs, we assess performance under varying string lengths, batch sizes, and thread counts, analyzing the tradeoffs for real-time XR applications. We also propose a unified evaluation method based on 3D Pareto Optimality to identify optimal device–model combinations based on quality and speed objectives. Additionally, we compare the efficiency of on-device LLMs with client–server and cloud-based setups, and evaluate their accuracy on two interactive tasks. We believe our findings offer valuable insights to guide future optimization efforts for LLM deployment on XR devices. Our evaluation method serves as standard groundwork for further research and development in this emerging field. The source code and supplementary materials are available at the links below.
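As an illustration of the Pareto-based selection idea mentioned in the description, the following is a minimal sketch, not the framework's actual implementation. It assumes each model–device pair is summarized by three scores where higher is better; the names `dominates`, `pareto_front`, the pair labels, and all numeric values are hypothetical placeholders, not measurements from the paper.

```python
from typing import Dict, List, Tuple

# Three objective scores per model-device pair; higher is assumed to be better.
# Which three objectives to use is an assumption of this sketch.
Scores = Tuple[float, float, float]


def dominates(a: Scores, b: Scores) -> bool:
    """Return True if `a` is at least as good as `b` on every objective
    and strictly better on at least one."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def pareto_front(pairs: Dict[str, Scores]) -> List[str]:
    """Return the names of all pairs that no other pair dominates."""
    return [
        name
        for name, scores in pairs.items()
        if not any(
            dominates(other_scores, scores)
            for other_name, other_scores in pairs.items()
            if other_name != name
        )
    ]


# Hypothetical placeholder values (quality, speed 1, speed 2); not real results.
candidates: Dict[str, Scores] = {
    "model_a@device_x": (0.9, 10.0, 5.0),
    "model_b@device_x": (0.8, 12.0, 6.0),
    "model_a@device_y": (0.7, 9.0, 4.0),   # dominated by model_a@device_x
    "model_c@device_y": (0.6, 15.0, 3.0),
}

print(pareto_front(candidates))
```

A pair stays on the front when no other pair matches or beats it on all three objectives at once, so the result is a set of defensible tradeoffs rather than a single winner. The pairwise comparison is quadratic in the number of pairs, which is unproblematic at the scale of 68 pairs.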

Sources

- Source Code/Scripts

- Video Demo

- Poster at IEEE VR 2025

- Paper (arXiv Preprint)

- Supplementary Materials (PDF)